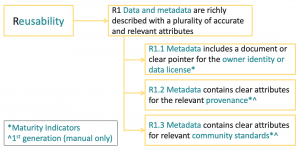

Reusability Maturity Indicators

Discover how to apply the FAIR Maturity Indicators to measure the REUSABILITY of the data and metadata.

- Reusability of data is compared with your FAIR objectives to identify and make improvements in an iterative manner

Overview

Implementation of the FAIR guidelines is measured by a framework of metrics, now termed Maturity Indicators (MIs) [1, 2, 3]. This method focuses on the MIs that measure the reusability of the data and metadata. It is a questionnaire for manual evaluation of the FAIR MIs for Reusability, which are recorded in the FAIRsharing registry [4, 5]. It is important to understand that FAIR is intended to be aspirational. This means that the MIs in any FAIR evaluation are used to understand how to improve the FAIRness of the data.

The FAIR MIs are now reaching 2nd generation maturity as a result of community feedback and the need to automate FAIR evaluation. Automated evaluation is available as a public demonstrator developed by Mark Wilkinson and collaborators [6, 7]. The 2nd generation MIs have been adopted for this method to prepare for automated evaluation once it is ready for production use by industry. The currently available FAIR evaluation tools and services have been compared by the Research Data Alliance [8], which has recently released the FAIR Data Maturity Model: specification and guidelines [9].

The MIs for Reusability are illustrated below:

How To

This method is a questionnaire for manual evaluation of data Reusability.

- Is there a license document for both the data and its associated metadata which is retrievable by humans?

- Indicates the presence of a human-readable pointer in the metadata to the data owner or license. This supports the legitimate reuse of the data with relative ease.

- Example: Identity of the data owner or license URL

- DOI: 10.25504/FAIRsharing.fsB7NK (FM-R1.1)

- Does the metadata provide a pointer to disclose the owner or license to the data which is readable by machine?

- Indicates the presence of a machine-readable pointer in the metadata to the data owner or license. This supports the legitimate reuse of the data with ease.

- Example: Identifier for the data owner or license URL

- DOI: 10.25504/FAIRsharing.YAdSwh (Gen2-MI-R1.1)

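As an illustration of what a machine-readable license pointer can look like, the following is a minimal sketch that looks for a license URL in a metadata record held as a Python dictionary. It is not the FAIR Evaluator's implementation. The function name `find_license_pointer`, the list of keys in `LICENSE_KEYS` and the example record are assumptions made for this example; the keys your metadata uses may differ.

```python
from urllib.parse import urlparse

# Assumed property names. schema.org and Dublin Core Terms both define a
# "license" property, but the exact keys depend on how the metadata is serialised.
LICENSE_KEYS = ("license", "schema:license", "dct:license")


def find_license_pointer(metadata):
    """Return the first license value that is an http(s) URL, else None."""
    for key in LICENSE_KEYS:
        value = metadata.get(key)
        # JSON-LD may wrap an identifier as {"@id": "..."}.
        if isinstance(value, dict):
            value = value.get("@id")
        if isinstance(value, str):
            parsed = urlparse(value)
            if parsed.scheme in ("http", "https") and parsed.netloc:
                return value
    return None


# Hypothetical metadata record used only for illustration.
example = {
    "@type": "Dataset",
    "name": "Example dataset",
    "license": "https://creativecommons.org/licenses/by/4.0/",
}
print(find_license_pointer(example))
```

A free-text value such as "ask the author" is not returned by this sketch, because a machine cannot resolve it to a license.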

- Is there detailed provenance information available for the data?

- Indicates whether there is provenance information associated with the data.

- Examples: These should cover at least two primary types of provenance information:

- Who produced the data, what was produced and when (e.g. citation) and

- Why and how the data was produced (i.e. context and relevance).

- Several identifiers are likely to be required:

- An identifier which points to a vocabulary or source to describe citational provenance (e.g. Dublin Core or lab notebook reference).

- Another identifier which points to a vocabulary (likely domain-specific) to describe contextual provenance (e.g. Experimental Factor Ontology).

- DOI: 10.25504/FAIRsharing.qcziIV (FM-R1.2)

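The two types of provenance described above can be checked separately. The following is a minimal sketch, assuming the metadata is available as a Python dictionary. The function name `provenance_coverage` and both key sets are assumptions for this example: the `dct:` keys follow Dublin Core Terms, while `efo:experimentalFactor` is a hypothetical placeholder for whatever domain-specific vocabulary you use.

```python
# Assumed keys for citational provenance (who, what, when).
CITATIONAL_KEYS = {"dct:creator", "dct:created", "dct:source"}
# Assumed keys for contextual provenance (why, how).
# "efo:experimentalFactor" is a hypothetical placeholder, not a real term.
CONTEXTUAL_KEYS = {"efo:experimentalFactor", "dct:description"}


def provenance_coverage(metadata, citational=CITATIONAL_KEYS,
                        contextual=CONTEXTUAL_KEYS):
    """Report which non-empty provenance keys are present, by type."""
    present = {key for key, value in metadata.items()
               if value not in (None, "", [], {})}
    return {
        "citational": sorted(present & set(citational)),
        "contextual": sorted(present & set(contextual)),
    }


# Hypothetical metadata record used only for illustration.
record = {
    "dct:creator": "https://orcid.org/0000-0000-0000-0000",
    "dct:created": "2020-06-01",
    "dct:description": "",
}
coverage = provenance_coverage(record)
print(coverage)
# Both lists must be non-empty for the record to cover both provenance types.
print(all(coverage.values()))
```

In this example the record has citational provenance only, because the description is empty, so the answer to the question would be "no" until contextual provenance is added.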

- Do the data and metadata meet the standards required by the community or a particular application?

- Indicates if the data and metadata meet the standards required by the community or a particular application.

- Example: Identifier for local or public guidelines for “minimal information about…”

- DOI: 10.25504/FAIRsharing.cuyPH9 (FM-R1.3)

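The answers to the questionnaire can be recorded in a simple structure so that unmet indicators are easy to list and revisit in the next iteration. The following is a minimal sketch: the identifiers and DOIs are those given in the questions above, while the class `MaturityIndicator`, the function `unmet_indicators`, the shortened question texts and the example answers are assumptions for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaturityIndicator:
    identifier: str
    doi: str
    question: str  # shortened paraphrase of the questionnaire item


REUSABILITY_MIS = (
    MaturityIndicator("FM-R1.1", "10.25504/FAIRsharing.fsB7NK",
                      "Human-retrievable license for data and metadata?"),
    MaturityIndicator("Gen2-MI-R1.1", "10.25504/FAIRsharing.YAdSwh",
                      "Machine-readable pointer to owner or license?"),
    MaturityIndicator("FM-R1.2", "10.25504/FAIRsharing.qcziIV",
                      "Detailed provenance information available?"),
    MaturityIndicator("FM-R1.3", "10.25504/FAIRsharing.cuyPH9",
                      "Community or application standards met?"),
)


def unmet_indicators(answers):
    """Return identifiers answered False or left unanswered."""
    return [mi.identifier for mi in REUSABILITY_MIS
            if not answers.get(mi.identifier, False)]


# Hypothetical answers from one evaluation round.
answers = {"FM-R1.1": True, "Gen2-MI-R1.1": False, "FM-R1.2": True}
for identifier in unmet_indicators(answers):
    print(f"Improve: {identifier}")
```

Unanswered questions are treated as unmet here, which keeps them visible as candidates for improvement rather than silently passing them.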

References and Resources

- The FAIR metrics group repository on GitHub at fairmetrics.org

- Wilkinson et al. 2018. A design framework and exemplar metrics for FAIRness. Scientific Data, volume 5, Article number: 180118. DOI: 10.1038/sdata.2018.118

- Supplementary information for Wilkinson et al. 2018: https://github.com/FAIRMetrics/Metrics/tree/master/Evaluation_Of_Metrics

- FAIR Maturity Indicators and Tools: https://github.com/FAIRMetrics/Metrics/tree/master/MaturityIndicators

- FAIRsharing registry search for FAIR metrics: https://fairsharing.org/standards/?q=FAIR+maturity+indicator

- Second generation Maturity Indicators tests: https://github.com/FAIRMetrics/Metrics/tree/master/MaturityIndicators/Gen2

- A public demonstration server for The FAIR Evaluator: https://w3id.org/AmIFAIR

- Research Data Alliance 2020 Results of an Analysis of Existing FAIR assessment tools: https://preview.tinyurl.com/yausl4s4

- Research Data Alliance 2020 FAIR Data Maturity Model: specification and guidelines: https://preview.tinyurl.com/y5tgby6w

Resources

- RDA FAIR Data Maturity Model

- Specification, guidelines and evaluation (manual sheet)

At a Glance

Related methods

- Findability FAIR Maturity Indicators

- Accessibility FAIR Maturity Indicators

- Interoperability FAIR Maturity Indicators

Setting

- Evaluation of reusability to improve the FAIRness of the data and metadata

Team

- Scientist generating or collecting the data and metadata

- Data steward for advice and guidance

Timing

- About 0.5 day to answer the questions; less if the evaluation is automated

- Additional time will depend on implementation of the FAIR improvements

Difficulty

- High

Resources

- How FAIR are your data? Checklist by Sarah Jones & Marjan Grootveld

Top Tips

- Although one of the Reusability MIs can be evaluated by machine, evaluation is currently mostly manual.

- The FAIR MIs are not an end in themselves; they indicate how data can be made more FAIR.

- Choices on which FAIR improvement to implement should be driven by feasibility and likely benefit, including return on investment.