FAIR data by design – Roche

Enabling transformation-less data integration and automated FAIR assessment

- Provision of a FAIR data catalog for data discovery and data access.

- Implementation of a FAIR end-to-end data management value chain for datasets, enabling transformation-less data integration.

- Application of the FAIR principles not only to data but also to application and API development.

Overview

Roche has a wealth of clinical and exploratory patient data for many indications collected over several decades. In 2017, a company-wide cross-functional and multi-year program was launched to transform our data management strategy and to create a corporate data culture: the EDIS (Enhanced Data and Insight Sharing) initiative. The key objective was to accelerate the development of new medical treatments by implementing the FAIR principles and sharing data across the organization.

At Roche, clinical datasets are collected by many different parts of the organization and stored in many different systems. Their data modalities include, but are not limited to, omics data, imaging data, biomarker data, study data and digital biomarker data. FAIRification has been the underlying methodology to standardize and harmonize these datasets, facilitating their integration and secondary use. We have also invested significant effort in curating the data to make them FAIR and to increase their quality.

The challenge was to semantically integrate the different datasets into a single harmonized dataset catalog. The added value of this integrated portal is to provide a single entry point for data discovery (find & access) rather than having to search multiple interfaces separately.

This led to the creation of an integrated data portal, the Roche Dataset Portal (RDP), where datasets can be automatically tagged with all their rich metadata, which can then be used for data discovery and data access. The primary design goal of the portal is a complete implementation of the FAIR principles, covering the portal application itself, its API implementation and the datasets searchable in the user interface.

Process

The Roche Dataset Portal (RDP) followed an integrated approach, applying the FAIR principles not only to the data provided by the downstream applications holding the datasets, but also to the portal application itself and to the APIs transmitting the data. While FAIR typically refers to FAIR data, the need for applications that are FAIR by design and able to hold data that are born FAIR is mostly not recognized. The same applies to FAIR APIs, which ensure the harmonized exchange of FAIR metadata and data across application boundaries.

As outlined in the FAIR Toolkit, the FAIR principles provide guidance on what true implementations look like. The FAIR maturity indicators then allow you to measure FAIRness against these definitions, with instructions on how to assess it, e.g. using a five-star rating system. For application design, a few criteria are of particular importance:

- The systematic use of Globally Unique, Persistent and Resolvable Identifiers (GUPRIs) for data and metadata.

- Provision of rich metadata to describe digital objects (such as provenance, governance and technical metadata).

- The use of community vocabularies to capture metadata and data instead of creating proprietary resources (SKOS, Dublin Core and the Provenance Ontology, but also domain-specific resources such as the Human Phenotype Ontology, the Experimental Factor Ontology or SNOMED).

- The use of formal, expressive languages (RDF, RDFS, OWL) to represent FAIR resources, ensuring that digital metadata and data are machine-actionable and can be integrated.
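As a minimal illustration of what "resolvable" can mean for a GUPRI, the Python sketch below builds an HTTP GET request that uses content negotiation to ask for an RDF representation of a dataset rather than an HTML page. The IRI `https://example.org/rdp/dataset/0001` and the helper `rdf_request` are hypothetical, and content negotiation is one common resolution mechanism, not necessarily the one RDP uses. The request is only constructed, never sent.

```python
from urllib.request import Request

# Hypothetical GUPRI for a dataset record; not a real Roche identifier.
DATASET_IRI = "https://example.org/rdp/dataset/0001"


def rdf_request(iri: str, media_type: str = "text/turtle") -> Request:
    """Build (but do not send) a GET request asking the server behind a
    GUPRI for a machine-readable RDF representation via the Accept header."""
    if not iri.startswith(("https://", "http://")):
        # A GUPRI must be dereferenceable, so we require an HTTP(S) IRI here.
        raise ValueError(f"not a resolvable HTTP(S) IRI: {iri}")
    return Request(iri, headers={"Accept": media_type}, method="GET")


req = rdf_request(DATASET_IRI)
print(req.full_url, req.get_method(), req.get_header("Accept"))

# The same identifier can serve JSON-LD to API clients.
jsonld_req = rdf_request(DATASET_IRI, "application/ld+json")
```

Because one identifier serves both humans and machines, the same IRI can be stored in every catalog and still be looked up in whatever serialization a consumer needs.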

1 The Roche Dataset Portal as a FAIR application

As stated above, offering a FAIR application implies that it is based on a harmonized, standardized and FAIR metadata model. At the same time, the application needs to be able to store FAIR data. Unfortunately, many if not most currently available vendor or in-house solutions do not support true FAIR implementations, as they cannot cope with GUPRIs or reuse external reference data for terminologies and schema definitions.

RDP fully follows the FAIR principles. Its meta model is specified entirely with FAIR standards and community vocabularies and is transparently published with WIDOCO.

The core of the RDP meta model is the Data Catalog Vocabulary (DCAT), which describes datasets. We extended the meta model with many other community vocabularies, including the Dublin Core Metadata Initiative (DCMI) metadata terms and types and the Provenance Ontology (PROV) (see Figure 1). If, and only if, no community standard was available, we carefully extended the meta model with self-defined classes and properties.

In addition, we leveraged the Roche Terminology System (RTS) to represent FAIR reference data and harmonize concepts (see also below).
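The sketch below shows what a dataset record built on this layering might look like in JSON-LD: DCAT for the dataset itself, DCMI terms and PROV for descriptive and provenance metadata, and a self-defined extension only where no community term fits. The namespace IRIs for dcat, dct, prov and skos are the ones listed under References and Resources; the `rdp:` namespace, the `rdp:dataModality` property and all example.org IRIs (including the stand-in terminology concepts) are hypothetical placeholders, not the actual RDP meta model.

```python
# Community namespaces from "References and Resources"; "rdp" is a
# hypothetical self-defined extension namespace used only for illustration.
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/",
    "prov": "http://www.w3.org/ns/prov#",
    "skos": "http://www.w3.org/2004/02/skos/core#",
    "rdp": "https://example.org/rdp/ontology#",
}
COMMUNITY = {"dcat", "dct", "prov", "skos"}

# Hypothetical dataset record; every IRI below is a placeholder.
record = {
    "@context": CONTEXT,
    "@id": "https://example.org/rdp/dataset/0001",
    "@type": "dcat:Dataset",
    "dct:title": "Example omics dataset",
    "dct:subject": {"@id": "https://example.org/rts/indication/123"},
    "prov:wasGeneratedBy": {"@id": "https://example.org/rdp/study/abc"},
    "rdp:dataModality": {"@id": "https://example.org/rts/modality/omics"},
}


def property_prefixes(node: dict) -> list[str]:
    """Return the namespace prefix of every compact-IRI property key,
    skipping JSON-LD keywords such as @id and @type."""
    return [key.split(":", 1)[0] for key in node
            if not key.startswith("@") and ":" in key]


prefixes = property_prefixes(record)
community = sum(p in COMMUNITY for p in prefixes)
print(f"{community} of {len(prefixes)} properties come from community vocabularies")
```

Counting property prefixes like this is one simple way to monitor how much of a record relies on community vocabularies versus self-defined extensions.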

We assessed the FAIRness of the application itself using a five-star rating for each of the four dimensions (Findable, Accessible, Interoperable, Reusable) and for the global score. The Roche Dataset Portal achieved an overall score of 4.75 out of 5.

Finally, the FAIR and machine-actionable meta model allowed us to automate the calculation of the FAIR score for all datasets in the portal, based on a transparent evaluation matrix.
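The RDP evaluation matrix itself is not reproduced here, so the following is only a sketch of how an automated, matrix-based FAIR score could be computed over machine-actionable metadata. The checks in `MATRIX`, the mapping of the share of passed checks to a 0 to 5 star scale, and the equal-weight mean used for the global score are all assumptions chosen for illustration; the example record is hypothetical.

```python
from statistics import mean


def _is_iri(value) -> bool:
    return isinstance(value, str) and value.startswith(("http://", "https://"))


def _ref(record: dict, key: str):
    """Return the IRI of a {"@id": ...} value, or the raw value otherwise."""
    value = record.get(key)
    return value.get("@id") if isinstance(value, dict) else value


# Hypothetical evaluation matrix: FAIR dimension -> (description, check) pairs.
MATRIX = {
    "F": [("has GUPRI", lambda r: _is_iri(r.get("@id"))),
          ("has title", lambda r: bool(r.get("dct:title")))],
    "A": [("has access rights", lambda r: _is_iri(_ref(r, "dct:accessRights")))],
    "I": [("has @context", lambda r: "@context" in r),
          ("subject is an IRI", lambda r: _is_iri(_ref(r, "dct:subject")))],
    "R": [("has license", lambda r: _is_iri(_ref(r, "dct:license"))),
          ("has provenance", lambda r: _is_iri(_ref(r, "prov:wasGeneratedBy")))],
}


def fair_score(record: dict) -> dict:
    """Score each dimension as 5 * (passed checks / total checks) and
    report the global score as the unweighted mean of F, A, I and R."""
    scores = {dim: 5 * sum(check(record) for _, check in checks) / len(checks)
              for dim, checks in MATRIX.items()}
    scores["global"] = mean(scores[d] for d in "FAIR")
    return scores


# Hypothetical record that lacks a license.
example = {
    "@context": {},
    "@id": "https://example.org/rdp/dataset/0001",
    "dct:title": "Example omics dataset",
    "dct:accessRights": {"@id": "https://example.org/rdp/access/internal"},
    "dct:subject": {"@id": "https://example.org/rts/indication/123"},
    "prov:wasGeneratedBy": {"@id": "https://example.org/rdp/study/abc"},
}
print(fair_score(example))
```

With this record the Reusable dimension drops to 2.5 stars and the global score to 4.375. Because the checks are plain functions over the metadata, adding a criterion to the matrix rescores every dataset without manual review.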

We can conclude that the architecture of the Roche Dataset Portal is FAIR by design.

Figure 1: Representation of the core meta model driving the Dataset Portal.

2 Implementing an end-to-end FAIR value chain

The Roche Dataset Portal is a catalog of datasets of various data types, including clinical, imaging and omics data. When building the EDIS ecosystem (the set of applications implementing the entire data management value stream), Roche used its FAIR reference data services for terminologies (RTS) and clinical standards (Global Data Standards Repository, GDSR) to harmonize data and metadata prospectively. This approach ensures that data are born FAIR. As a consequence, concepts such as substances, indications and clinical trial phases are identical in all data catalogs, because they systematically use the same GUPRIs. Aligning on the governance of the reference data and ensuring consistency across datasets required a significant initial effort. However, there are strong economies of scale, as the same concepts are reused many times in different applications.
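The effect of shared GUPRIs can be shown with a small Python sketch: when two catalogs reference the same reference-data IRI for an indication, their datasets can be grouped by that IRI directly, with no label matching or mapping table. The catalogs, dataset IRIs and terminology-style concept IRIs are hypothetical placeholders.

```python
from collections import defaultdict

# Hypothetical concept GUPRI standing in for an RTS indication.
INDICATION = "https://example.org/rts/indication/123"

# Hypothetical records from two different catalogs of the ecosystem.
clinical_catalog = [
    {"@id": "https://example.org/clinical/ds/1", "dct:subject": {"@id": INDICATION}},
]
omics_catalog = [
    {"@id": "https://example.org/omics/ds/7", "dct:subject": {"@id": INDICATION}},
    {"@id": "https://example.org/omics/ds/8",
     "dct:subject": {"@id": "https://example.org/rts/indication/456"}},
]


def datasets_by_concept(*catalogs: list) -> dict:
    """Group dataset IRIs from any number of catalogs by the concept GUPRI
    they reference; identical IRIs mean identical concepts, so no mapping."""
    index = defaultdict(list)
    for catalog in catalogs:
        for record in catalog:
            index[record["dct:subject"]["@id"]].append(record["@id"])
    return dict(index)


index = datasets_by_concept(clinical_catalog, omics_catalog)
print(index[INDICATION])
```

Had one catalog used a local label such as "NSCLC" and the other a different spelling, the same grouping would first require a curated mapping; that is the step prospective harmonization removes.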

In return, the Roche Dataset Portal shares a superset of all concepts contained in the reference terminologies of the downstream applications, which guarantees interoperability when integrating the various datasets. The biggest advantage is that integration into the portal requires no additional integration or transformation effort. End-to-end FAIRification results in fully machine-actionable data and metadata, and the FAIR APIs provide the semantic glue.
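Assuming the portal holds the superset of reference concepts as a set of IRIs, checking whether an incoming record can be integrated reduces to set membership rather than transformation. The sketch below illustrates that idea; `PORTAL_CONCEPTS`, the helper names, the record shapes and all IRIs are hypothetical.

```python
# Hypothetical superset of reference concepts known to the portal
# (in the text these come from RTS and GDSR; the IRIs are placeholders).
PORTAL_CONCEPTS = {
    "https://example.org/rts/indication/123",
    "https://example.org/rts/indication/456",
    "https://example.org/rts/phase/3",
}


def concept_iris(record: dict) -> set[str]:
    """Collect every {"@id": ...} value referenced by a record's properties."""
    found = set()
    for key, value in record.items():
        if key.startswith("@"):
            continue  # skip the record's own @id, @type, @context
        for v in value if isinstance(value, list) else [value]:
            if isinstance(v, dict) and "@id" in v:
                found.add(v["@id"])
    return found


def unknown_concepts(record: dict) -> set[str]:
    """Concepts the portal cannot interpret; an empty set means the record
    can be ingested as-is, without any mapping or transformation step."""
    return concept_iris(record) - PORTAL_CONCEPTS


ok_record = {
    "@id": "https://example.org/omics/ds/7",
    "dct:title": "Example omics dataset",
    "dct:subject": [{"@id": "https://example.org/rts/indication/123"},
                    {"@id": "https://example.org/rts/indication/456"}],
}
legacy_record = {
    "@id": "https://example.org/legacy/ds/9",
    "dct:subject": {"@id": "https://example.org/local/term/xyz"},
}
print(unknown_concepts(ok_record), unknown_concepts(legacy_record))
```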

3 FAIR APIs based on JSON-LD

FAIR APIs, a key component of a truly FAIR ecosystem, are typically overlooked. Modern architectures break up large monolithic systems into components, abstraction layers and microservices, and the exchange of data and metadata between them is handled by APIs. Even if our data and metadata are FAIR, we are almost back to square one if our APIs do not exchange datasets between applications in a FAIR way. This is why FAIR APIs are mandatory.

JSON-LD is a JSON-based format that provides the semantics of attributes, and the FAIR namespaces they belong to, in a context (@context). Every entity in the payload is identified by a resource IRI, i.e. a GUPRI. In this way, JSON output becomes a serialization of RDF.
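To show how a context turns plain JSON keys into IRIs, here is a deliberately simplified Python sketch of JSON-LD term expansion. It handles only plain terms and compact IRIs (`prefix:suffix`) in property names and `@type`, against a flat `@context`; a conformant processor following the W3C JSON-LD 1.1 algorithms does considerably more (nested and remote contexts, `@vocab`, value objects, arrays). The payload and its IRIs are hypothetical.

```python
def expand_iri(term: str, context: dict) -> str:
    """Resolve a plain term or a compact IRI (prefix:suffix) using a flat context."""
    if isinstance(context.get(term), str):
        return context[term]  # the term itself is defined in the context
    prefix, sep, suffix = term.partition(":")
    if sep and prefix in context and not suffix.startswith("//"):
        return context[prefix] + suffix  # compact IRI such as dcat:Dataset
    return term  # already an absolute IRI, or unknown: leave unchanged


def expand_node(node: dict) -> dict:
    """Simplified expansion of one node: keys and @type become full IRIs.
    Values are kept as-is (real expansion wraps them in value objects)."""
    context = node.get("@context", {})
    expanded = {}
    for key, value in node.items():
        if key == "@context":
            continue
        if key == "@type":
            expanded[key] = expand_iri(value, context)
        elif key.startswith("@"):
            expanded[key] = value
        else:
            expanded[expand_iri(key, context)] = value
    return expanded


payload = {
    "@context": {
        "dcat": "http://www.w3.org/ns/dcat#",
        "dct": "http://purl.org/dc/terms/",
        "title": "http://purl.org/dc/terms/title",
    },
    "@id": "https://example.org/rdp/dataset/0001",
    "@type": "dcat:Dataset",
    "title": "Example omics dataset",
    "dct:description": "Placeholder record",
}
for key, value in expand_node(payload).items():
    print(key, "->", value)
```

The plain key `title` and the compact key `dct:description` both end up as Dublin Core IRIs, which is what lets a consumer interpret the payload without out-of-band documentation.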

JSON-LD can easily be translated into any other RDF serialization, and it can also be used to transfer or federate knowledge graphs. The Smart API project developed an OpenAPI-based specification for defining the key API metadata elements and value sets.
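As an illustration of that translation, the sketch below serializes one already-expanded JSON-LD node (full IRIs as keys) into N-Triples. It is a hand-written subset for illustration only, covering IRI references and plain string literals; a production system would rely on an RDF library or a JSON-LD processor. The node and its IRIs are hypothetical.

```python
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"


def _literal(text: str) -> str:
    """Quote a plain string literal with N-Triples escaping."""
    escaped = (text.replace("\\", "\\\\").replace('"', '\\"')
                   .replace("\n", "\\n").replace("\r", "\\r"))
    return f'"{escaped}"'


def to_ntriples(node: dict) -> list[str]:
    """Emit one N-Triples line per (property, value) of an expanded node.
    Blank nodes, datatypes and language tags are out of scope here."""
    subject = f"<{node['@id']}>"
    lines = []
    for key, value in node.items():
        if key == "@id":
            continue
        predicate = RDF_TYPE if key == "@type" else key
        for v in value if isinstance(value, list) else [value]:
            if key == "@type":
                obj = f"<{v}>"
            elif isinstance(v, dict) and "@id" in v:
                obj = f"<{v['@id']}>"  # IRI reference, e.g. a GUPRI
            else:
                obj = _literal(str(v))
            lines.append(f"{subject} <{predicate}> {obj} .")
    return lines


expanded = {
    "@id": "https://example.org/rdp/dataset/0001",
    "@type": "http://www.w3.org/ns/dcat#Dataset",
    "http://purl.org/dc/terms/title": 'Example "omics" dataset',
    "http://purl.org/dc/terms/subject": {"@id": "https://example.org/rts/indication/123"},
}
print("\n".join(to_ntriples(expanded)))
```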

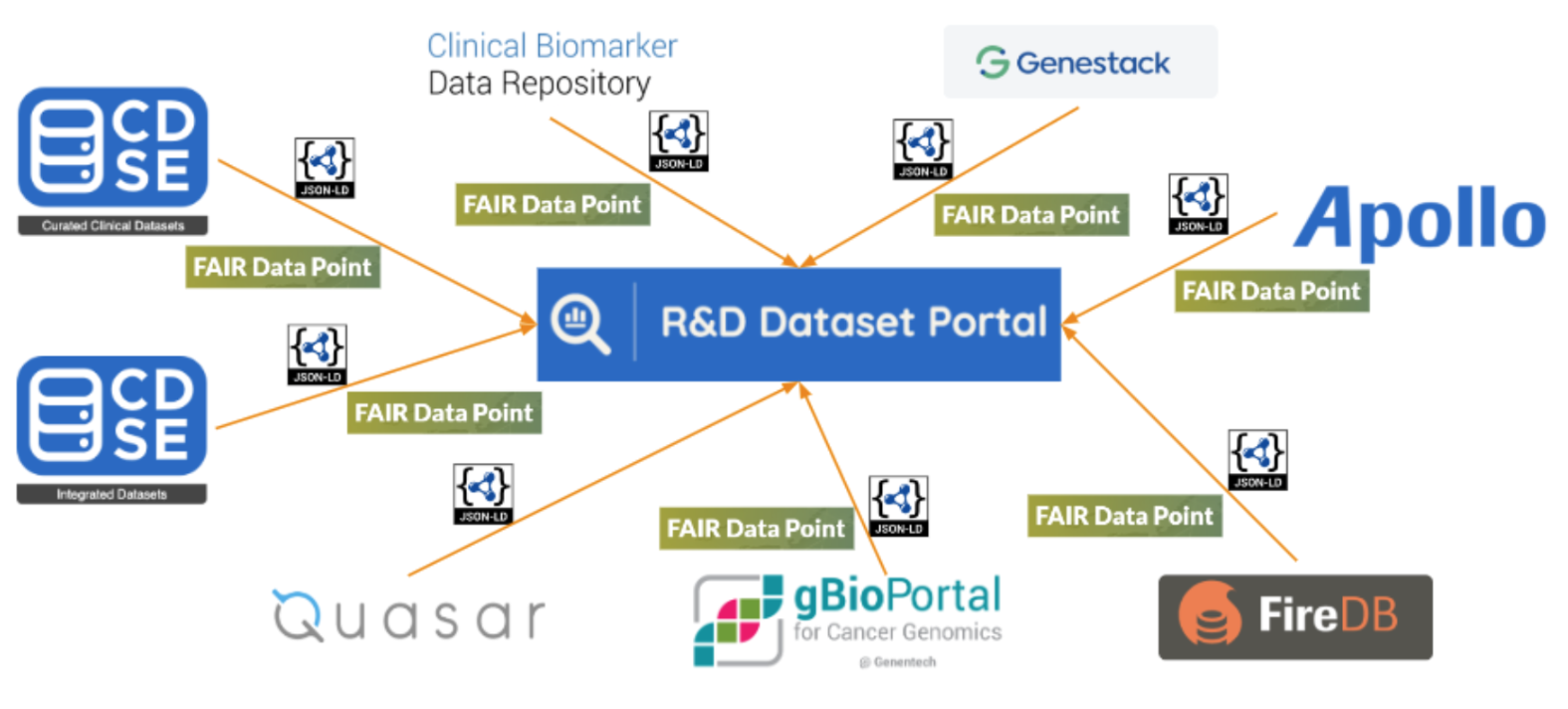

In the implementation of RDP, we introduced a significant change. Typically, a consuming application has to adapt to each downstream application, interpreting its API payloads or building connectors that require data engineers. For RDP, we inverted this logic: all downstream applications provide the same JSON-LD endpoint as a FAIR Data Point. Standardizing and harmonizing FAIR Data Points at industry scale could revolutionize the way data are acquired, internalized and exchanged.
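The inverted logic can be sketched on the consumer side: because every source exposes the same JSON-LD contract, the portal needs a single harvesting routine instead of one connector per source. In this Python sketch the endpoint URLs, the payload shape (a JSON-LD document whose `@graph` lists dataset nodes) and the function names are assumptions; they are not taken from RDP or from the FAIR Data Point specification. The fetcher is injectable so the example runs without network access.

```python
import json
from typing import Callable, Iterable
from urllib.request import Request, urlopen


def http_fetch(url: str) -> bytes:
    """Fetch a payload over HTTP, asking for JSON-LD (not used in the demo)."""
    with urlopen(Request(url, headers={"Accept": "application/ld+json"})) as resp:
        return resp.read()


def harvest(endpoints: Iterable[str],
            fetch: Callable[[str], bytes] = http_fetch) -> list[dict]:
    """Read dataset nodes from every endpoint with one code path: since all
    sources share the same JSON-LD contract, there is no per-source
    connector and no transformation, only parsing."""
    catalog = []
    for url in endpoints:
        doc = json.loads(fetch(url))
        context = doc.get("@context", {})
        for node in doc.get("@graph", []):
            catalog.append({"@context": context, **node})
    return catalog


# Hypothetical sources serving identically shaped JSON-LD documents.
CTX = {"dcat": "http://www.w3.org/ns/dcat#", "dct": "http://purl.org/dc/terms/"}
FAKE_SOURCES = {
    "https://clinical.example.org/fdp": {"@context": CTX, "@graph": [
        {"@id": "https://example.org/clinical/ds/1", "@type": "dcat:Dataset"}]},
    "https://omics.example.org/fdp": {"@context": CTX, "@graph": [
        {"@id": "https://example.org/omics/ds/7", "@type": "dcat:Dataset"},
        {"@id": "https://example.org/omics/ds/8", "@type": "dcat:Dataset"}]},
}
records = harvest(list(FAKE_SOURCES),
                  fetch=lambda url: json.dumps(FAKE_SOURCES[url]).encode())
print(len(records), "datasets harvested")
```

Adding a new source in this design means adding a URL to the list; the harvesting code does not change, which is the practical meaning of placing the integration burden on the producer.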

Figure 2 below illustrates this approach for the Roche Dataset Portal and the downstream applications.

Figure 2: Transformationless data integration based on fully FAIRified machine-actionable data and dataset models.

The center of the figure shows the R&D Dataset Portal. Around it is a selection of data catalogs, representing clinical, biomarker and omics data, that send their datasets to the portal. Each of these systems has implemented a FAIR Data Point using JSON-LD to provide the data and metadata for its datasets, so that they can be read natively into the portal without ETL or map-and-merge. Everything has been harmonized and standardized across all repositories.

Table 1: Extract of community vocabularies used for the meta model of the Dataset Portal

Outcomes

The Roche Dataset Portal, as a FAIR data catalog, has several unique value propositions. First, it clearly demonstrates that FAIRification at scale along the entire data management value chain is feasible. Users get an integrated view of almost 20,000 datasets spanning a variety of data types, such as imaging, clinical studies, real-world data (RWD), omics data and biomarkers. All data assets share a unified, harmonized meta model for data and metadata based on the FAIR principles, enabling a unique data discovery experience, including discovery across data types such as clinical studies and RWD.

The integrated architecture applied to the entire ecosystem allows datasets to be consumed into the RDP data catalog without transformation. Neither ETL nor map-and-merge is needed.

The use of FAIR APIs based on JSON-LD is crucial to this success, as all downstream applications expose the same kind of FAIR Data Point.

Finally, the portal introduces product thinking. In a typical IT setting, the consuming application has to cope with a variety of interfaces, which puts the burden of integration on the consumer. In our approach, the data producer makes its data assets available for immediate consumption. The data producers are therefore responsible for offering easy access to, and distribution of, their datasets.

Additional Information

Roche has been running a cross-functional, strategic, multi-year initiative to harmonize, standardize and integrate large amounts of data assets and make them available for secondary use. The entire undertaking has been driven by the consistent application of the FAIR principles across more than 20 applications that capture, integrate and distribute data assets. Each of these applications has been FAIRified using our reference data services, which are themselves FAIR. In this way, we have built an integrated architecture spanning the entire data management value chain that is FAIR by design, so that all data are born FAIR.

This required an unprecedented level of collaboration between teams in the involved functions on a global scale, as well as the provision of FAIR reference data covering all required standards and terminologies. As an outcome, Roche can now use a very large number of datasets representing different data types.

Embedding the FAIR principles in all processes and applications has been the key success factor in delivering the desired outcome. The Roche Dataset Portal addresses all aspects of the FAIR principles: it is built on a FAIR data model, uses community vocabularies wherever possible to address interoperability, provides ways to display and quantify internal access guidelines, offers an automated method to calculate the FAIR score, and exposes a JSON-LD API endpoint through which applications publish into RDP. The design of RDP thus ensures a complete and true FAIR implementation.

References and Resources

Web sites:

- http://www.w3.org/ns/dcat#

- http://purl.org/dc/dcmitype/

- http://purl.org/dc/terms/

- http://xmlns.com/foaf/0.1/

- http://purl.org/pav/

- http://www.w3.org/ns/prov#

- http://www.w3.org/2004/02/skos/core#

- https://smart-api.info/guide

At a Glance

Team

Team(s): the Dataset Portal core team consisted of about 15 people responsible for design and implementation, as well as for FAIRification through the provision of FAIR reference data.

Acknowledgements:

Fabien Richard, Silvia Jimenez, Roy Weiler, Yelena Budovskaya and Mark McCreary.

Timeline

Timeline: about one year to set up the global teams and two years for execution. The processes are now embedded in the organization, and the Roche Dataset Portal continues to grow.

Benefits and deliverables

Data assets are now interoperable, reusable, and easy to find and access. Processes have been streamlined and made more effective, while at the same time delivering high-quality, harmonized and standardized data.

Authors

Martin Romacker, Rama Balakrishnan, Oliver Steiner, Hugo De Schepper, and YuChi Kuah

Top Tips

- Tip 1: Think boldly and do not take a defensive position. The real question is: can we still afford not to be FAIR?

- Tip 2: It is a myth that prospective FAIRification is more expensive. It requires more effort at the beginning but then pays off many times over.

- Tip 3: FAIR everywhere: FAIR applications, FAIR APIs, and FAIR data through the systematic use of GUPRIs and community standards.